Talking Tree

AI-powered companionship for personal and interactive growth.

2022-2023

Summary

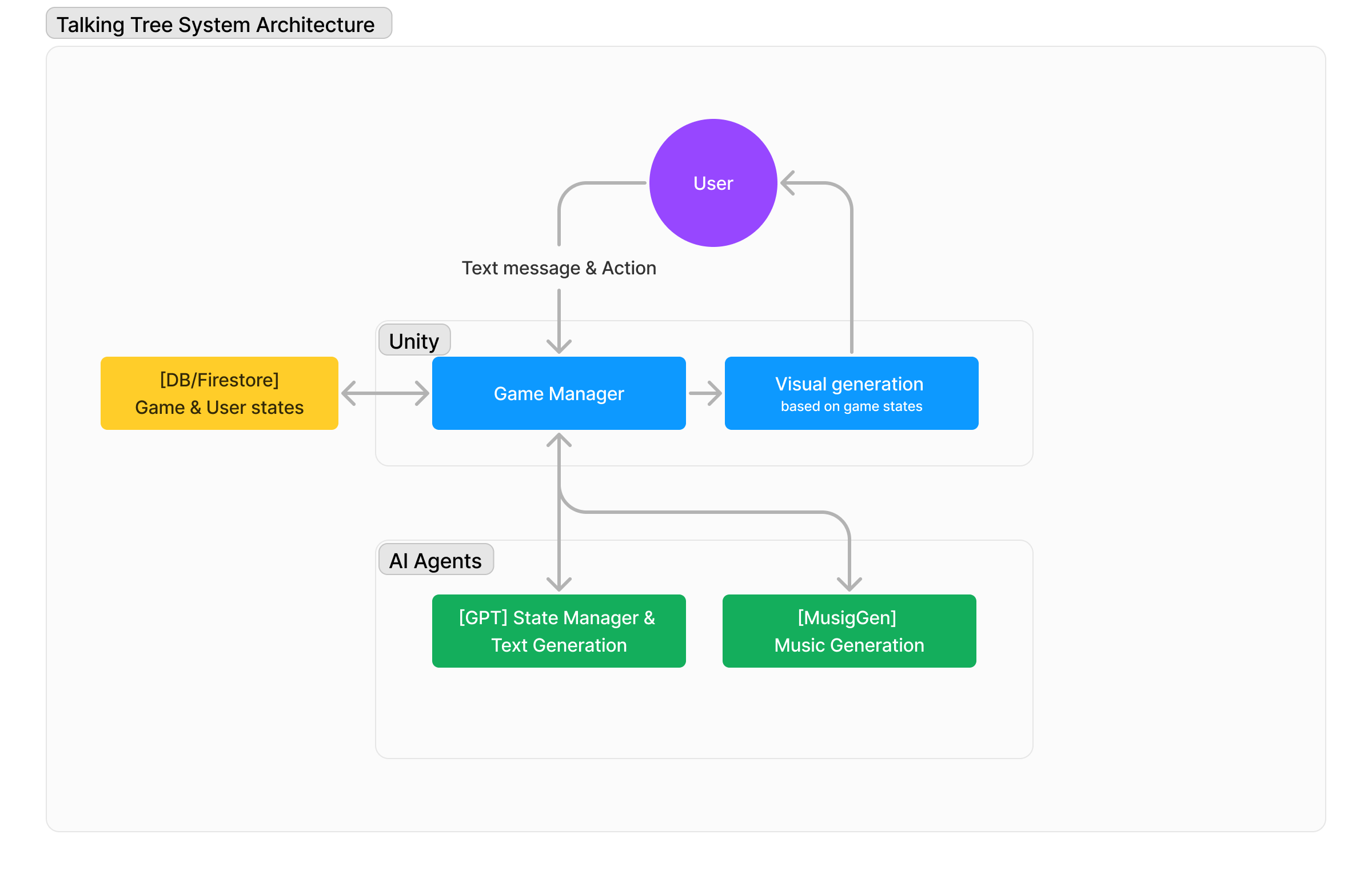

"Talking Tree" is a proof-of-concept product demo that explores the potential of generative AI models in interactive 3D environments, particularly for education and psychological companionship. In the mobile app, users nurture a seed into a tree, talking with it and growing alongside it; the tree in turn changes its growth pattern in response to these conversations. The project uses an LLM (GPT-4) for text generation and state control, and MusicGen for music generation. Visually, parameters set by GPT-4 produce varied forms of the tree that reflect its interactions with the user.
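One common way to let a single LLM call handle both text generation and state control is to ask the model for structured output. The sketch below assumes GPT-4 is prompted to answer in JSON that carries the tree's reply plus small changes to its internal state. The field names (`reply`, `state`, `mood`, `growth`) and the per-message step cap are hypothetical illustrations, not the project's actual schema.

```python
import json
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TreeState:
    # Hypothetical state fields, each kept in [0.0, 1.0].
    mood: float = 0.5    # 0 = withdrawn, 1 = cheerful
    growth: float = 0.0  # 0 = seed, 1 = fully grown


MAX_STEP = 0.2  # hypothetical cap on how far one message can move a state value


def _delta(updates: dict, key: str) -> float:
    """Read one numeric delta, ignoring missing or non-numeric values."""
    try:
        value = float(updates.get(key, 0.0))
    except (TypeError, ValueError):
        return 0.0
    if not math.isfinite(value):
        return 0.0
    return max(-MAX_STEP, min(MAX_STEP, value))


def apply_model_output(raw: str, state: TreeState) -> tuple[str, TreeState]:
    """Split a JSON model reply into spoken text and an updated tree state.

    Assumed shape: {"reply": "...", "state": {"mood": 0.1, "growth": 0.05}},
    where the state values are deltas. Malformed output leaves the state unchanged.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return raw.strip(), state  # not JSON: treat the whole output as plain text
    if not isinstance(data, dict):
        return raw.strip(), state
    updates = data.get("state")
    if not isinstance(updates, dict):
        updates = {}
    mood = min(1.0, max(0.0, state.mood + _delta(updates, "mood")))
    # Growth only moves forward: the tree never shrinks back toward a seed.
    growth = min(1.0, max(state.growth, state.growth + _delta(updates, "growth")))
    return str(data.get("reply", "")), TreeState(mood=mood, growth=growth)
```

Validating and clamping the model's proposals in ordinary code keeps the app stable even when the LLM returns malformed or extreme values.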

LLMs show considerable promise for educational and psychotherapeutic applications, and many academic and commercial projects are already exploring this area. "Talking Tree" primarily showcases the combined effect of generative 3D environments and generated music. Further exploration depends on collaboration with professionals in education and psychology.

Visual Generation

Current LLMs have limited spatial reasoning in 3D, but they are good at narrative and at setting parameters. We therefore connected the LLM to pre-written procedural visual programs: the model controls the tree's growth parameters directly, and the procedural code turns those values into geometry, which yields plausible results. The LLM can also control basic environmental elements such as daylight and weather.
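The division of labour described here, where the LLM picks numbers and deterministic code builds the shape, can be sketched with a simple L-system. The project's own procedural program is not described in detail, so the parameter names, safe ranges, rewriting rule, and weather set below are all hypothetical stand-ins.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GrowthParams:
    iterations: int = 3         # how many times the branching rule is applied
    branch_angle: float = 25.0  # degrees between a branch and its parent
    length_decay: float = 0.7   # child branch length relative to its parent


# Hypothetical safe ranges; whatever the LLM proposes is clamped into them.
RANGES = {"iterations": (1, 6), "branch_angle": (5.0, 60.0), "length_decay": (0.4, 0.9)}
WEATHER = {"clear", "rain", "snow", "fog"}  # hypothetical weather states


def params_from_llm(values: dict) -> GrowthParams:
    """Build GrowthParams from LLM-proposed values, falling back to defaults."""
    defaults = GrowthParams()
    fields = {}
    for name, (lo, hi) in RANGES.items():
        default = getattr(defaults, name)
        try:
            value = type(default)(values.get(name, default))
        except (TypeError, ValueError, OverflowError):
            value = default
        fields[name] = min(hi, max(lo, value))
    return GrowthParams(**fields)


def environment_from_llm(values: dict) -> tuple[float, str]:
    """Clamp daylight to [0, 1] (0 = night, 1 = noon) and validate the weather name."""
    try:
        daylight = min(1.0, max(0.0, float(values.get("daylight", 1.0))))
    except (TypeError, ValueError):
        daylight = 1.0
    weather = values.get("weather", "clear")
    if not isinstance(weather, str) or weather not in WEATHER:
        weather = "clear"
    return daylight, weather


def expand(axiom: str, rules: dict, iterations: int) -> str:
    """Apply the L-system rewriting rules `iterations` times."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def tree_segments(p: GrowthParams) -> list:
    """Interpret the L-system string with a 2D turtle and return line segments.

    F = draw forward, + / - = turn, [ = save state and shorten, ] = restore state.
    """
    program = expand("F", {"F": "F[+F][-F]"}, p.iterations)
    x, y, heading, length = 0.0, 0.0, 90.0, 1.0  # start at the origin, pointing up
    stack, segments = [], []
    for ch in program:
        if ch == "F":
            nx = x + length * math.cos(math.radians(heading))
            ny = y + length * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":
            heading += p.branch_angle
        elif ch == "-":
            heading -= p.branch_angle
        elif ch == "[":
            stack.append((x, y, heading, length))
            length *= p.length_decay
        elif ch == "]":
            x, y, heading, length = stack.pop()
    return segments
```

Because every value passes through a clamp before reaching the generator, the LLM can only choose among shapes the renderer already knows how to draw, which is one way to get "plausible" results from a model with weak spatial reasoning.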

Music Generation

In our tests, we first tried having GPT-4 generate MIDI directly, hoping that a single agent could produce both the text and the music. The results were unstable, which suggests the model would need further fine-tuning on musical data. We then switched to having GPT-4 write text prompts for Meta's text-to-music model, MusicGen, which handled the task reliably.
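The two-stage setup (GPT-4 writes a text description, MusicGen renders the audio) can be sketched as follows. `ask_llm` is a hypothetical placeholder for whatever GPT-4 client the project used, and the state fields, instruction wording, and fallback prompt are assumptions. Only the closing comment refers to a real API: Meta's `audiocraft` package, which exposes MusicGen.

```python
from typing import Callable

FALLBACK_PROMPT = "calm ambient piano, slow tempo, warm and gentle"  # hypothetical default


def build_music_request(mood: float, growth: float, weather: str) -> str:
    """Compose the instruction sent to the LLM, asking it for one MusicGen prompt."""
    return (
        "You choose background music for a companion app about a growing tree. "
        f"The tree's mood is {mood:.2f} (0 = sad, 1 = joyful), its growth is "
        f"{growth:.2f} (0 = seed, 1 = mature), and the weather is {weather}. "
        "Reply with ONE line describing instrumental music: genre, instruments, "
        "tempo in BPM, and feel. No lyrics and no extra commentary."
    )


def clean_music_prompt(raw: str, max_chars: int = 200) -> str:
    """Keep the first non-empty line, strip surrounding quotes, and cap its length."""
    for line in raw.splitlines():
        line = line.strip().strip('"').strip()
        if line:
            return line[:max_chars]
    return FALLBACK_PROMPT


def music_prompt(mood: float, growth: float, weather: str,
                 ask_llm: Callable[[str], str]) -> str:
    """`ask_llm` is a hypothetical stand-in for a chat-completion call."""
    return clean_music_prompt(ask_llm(build_music_request(mood, growth, weather)))


# The resulting text would then go to MusicGen, for example via audiocraft:
#   from audiocraft.models import MusicGen
#   model = MusicGen.get_pretrained("facebook/musicgen-small")
#   model.set_generation_params(duration=10)  # seconds of audio
#   wav = model.generate([prompt])
```

Splitting the work this way keeps the LLM in a role it handles well (describing music in words) and leaves audio synthesis to a model trained for it.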

Reflection

"Talking Tree" is still at the proof-of-concept stage, but it demonstrates that generative AI can fully control visuals, sound, and text in small-scale virtual environments, creating personalized experiences and real-time, intimate interactions with users. With current technology, however, LLM processing speed remains a bottleneck for that sense of real-time responsiveness and intimacy. One direction for further experiments is running multiple small-scale models in parallel.
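The parallel-models idea can be illustrated with `asyncio`. In this sketch each model call is faked with a sleep; the task names and latencies are made up and say nothing about real model speeds. It only shows that independent requests (dialogue, tree parameters, music prompt) issued concurrently finish in roughly the time of the slowest one rather than the sum of all three.

```python
import asyncio
import time


async def fake_model(task: str, latency_s: float) -> str:
    """Hypothetical stand-in for a call to a small, specialised model."""
    await asyncio.sleep(latency_s)
    return f"{task}: done"


async def run_turn() -> tuple[list[str], float]:
    """Issue three independent model calls concurrently and time the whole turn."""
    start = time.perf_counter()
    results = await asyncio.gather(
        fake_model("dialogue", 0.3),
        fake_model("tree_params", 0.2),
        fake_model("music_prompt", 0.25),
    )
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(run_turn())
    print(results)
    print(f"elapsed ~{elapsed:.2f}s (sequential would be ~0.75s)")
```

This only helps for steps that are truly independent; if, for instance, the music prompt depends on the dialogue output, those two calls still have to run in sequence.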

Evaluating the psychological and educational benefits of this virtual companion app will require further user testing and collaboration with professionals. Still, we believe personalized virtual scenarios and AI companions can be beneficial. As an extended application, imagine VR classrooms where interactive 3D environments adapt quickly to the learning states of teachers or students, potentially offering significant support for teaching.